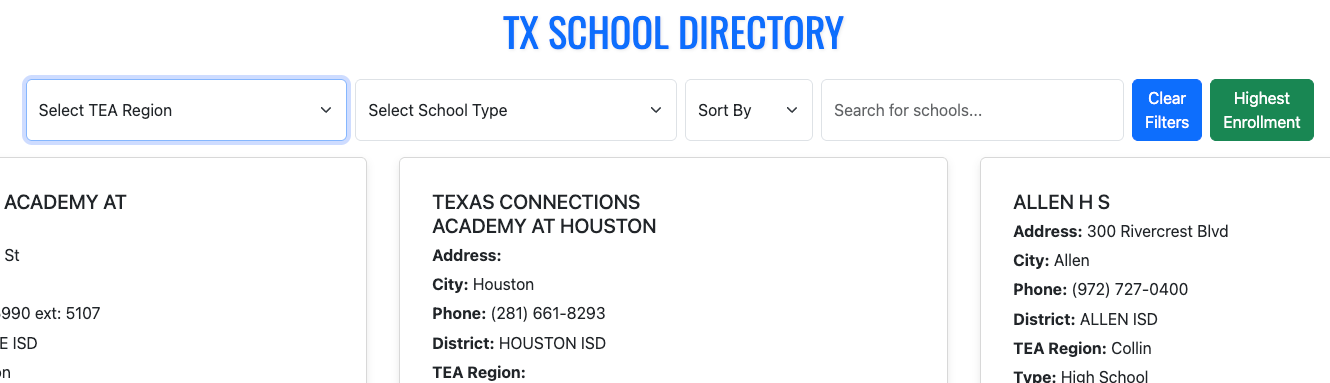

5240 Harvesting, Storing and Retrieving Data (aka A Google Cloud Course!)

The class 5240 Harvesting, Storing and Retrieving Data began with a crash course in Big Data: structured, unstructured and semi-structured data, the 5 V’s of big data (Volume, Variety, Veracity, Velocity, Value), etc. It quickly pivoted to focus primarily on the Google Cloud Platform's data analytics (BigQuery) and database (Bigtable, Cloud Spanner and Cloud SQL) tools.

It was a solid mix of theory and practice. In fact, the midterm consisted of both a theory portion and a hands-on lab portion. The course provided an introduction to collecting, storing, managing, retrieving and processing datasets. Techniques for large and small datasets were considered, as both are needed in data science applications. Traditional survey and experimental design principles for data collection were covered, as well as script-based programming techniques for large-scale data harvesting from third-party sources. Data wrangling methodologies were introduced for cleaning and merging datasets, storing data for later analysis and constructing derived datasets. Various storage and processing architectures were introduced, with a focus on how approaches depend on applications, data velocity and end users. The course emphasized applications and included many hands-on projects.

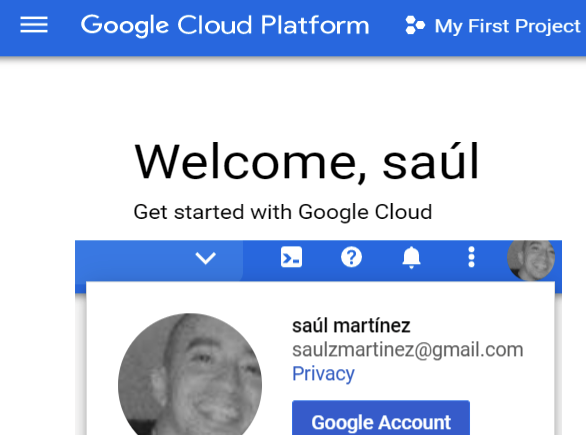

A few of the tasks were particularly overwhelming since I had never used the Google Cloud Platform and it was critical to keep tabs on your usage. Although the tools were similar to other data analytics and data science tools, the proprietary nature of the processes and the click-throughs needed to complete a task were sometimes not very intuitive. All that to say, there was a bit of a learning curve. However, the professor of the course was super helpful and provided step-by-step guidance as needed.

Tools Utilized: Google Cloud Platform (BigQuery, Bigtable, Cloud SQL, Cloud Spanner)

Skills Acquired: Collecting, storing, managing, retrieving and processing datasets using the Google Cloud Platform.